On May 13, 2024, OpenAI introduced yet another model in the ever-advancing field of artificial intelligence: “GPT-4o”. It seems like every day there is a new development in the modern AI era, with every company trying to get its foot in the door. We quite literally have the elixir vitae in the palm of our hands; or, more accurately, at the tap of a screen. But is this modern-day “Gold Rush” the start of a brilliant future, fostering intellect at every corner with new creative apparatuses, or the decline of individual thought and a one-way pitfall to stupidity? Where will the line be drawn between a “tool” and a “substitute” in our discussion of AI? As we wade further and further into technology, will we swim so far that we, too, disconnect?

Developers of AI software introduce the concept as a “foundational and transformational technology that will provide compelling and helpful benefits to people and society through its capacity to assist, complement, empower, and inspire people in almost every field of human endeavor,” in the words of Google itself in an introduction to its new “Gemini” virtual-assistant platform, which is similar to the well-known ChatGPT.

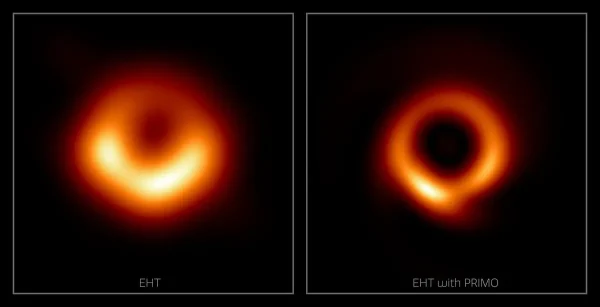

AI is being integrated into today’s hyper-technologized first-world societies and supposedly serves as a tool to aid in pioneering intellectual discovery. One such breakthrough is easing the processing of astronomical images for astronomers: in 2023, the 2019 image of the black hole at the center of the galaxy Messier 87 (M87) was reprocessed, resulting in a much clearer image of its structure and showing how the technology can accelerate and refine results.

That being said, the technology is more often used by the common man to aid in undemanding daily tasks, sometimes answering the simplest questions. It has also been a highly prevalent source of plagiarism among students and teachers alike, with 54% of students using it “to help” on assignments.

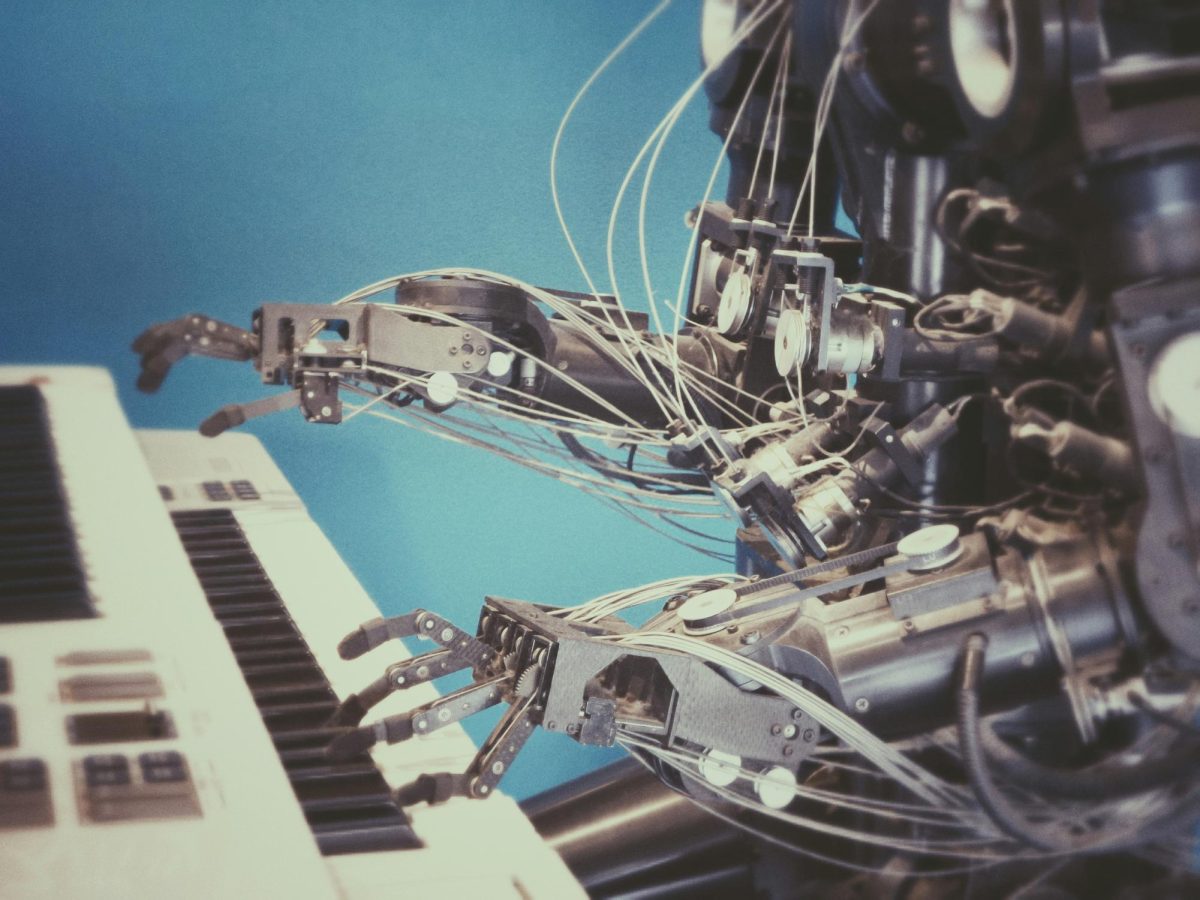

Similarly, a commonly contested issue surrounding AI, most notably tied to the 2023 writers’ strike and job insecurity, is the relationship between the technology and art, since some software (e.g., Facetune) has the ability to alter and reproduce any piece of art it is fed. Artists fear not only that the technology’s ability to remix and/or reproduce artwork steals the intellectual and physical property of someone else’s creation, but also that credit will not be given where it is due. (The ironic de-aging of Harrison Ford in a recent Indiana Jones sequel speaks strongly to the issue.)

Delving into the ethics of AI (always rooting back to the four basic ethical principles), the technology does satisfy the principle of beneficence when viewed through the former lens of aiding in discovery and development: it is a tool that promotes the welfare of humanity by performing tasks previously unknown or impossible for humans. Despite this, it is more commonly argued that the technology actually violates beneficence in favor of its antagonist, maleficence. So where does one interpretation falter while the other succeeds? In truth, it is not a question of which one is wrong, but rather a realization of the complexity and duality of AI (one might even call it fake!). To explain this, we turn to the fourth ethical principle, autonomy.

From the dawn of time, man has advanced by way of technology; fire was the newest software for the caveman. Humanity has seen firsthand the ever-advancing pace at which our societies move, and with that comes the constant production of new technology. Modern technology has focused on easing the responsibilities of humans to make life easier, and AI is the newest means of doing so. Respect for autonomy is usually neglected when discussing AI and its ethics; however, it should really be the most applicable principle when analyzing the technology as a “tool”, whether for better or worse.

As individuals, we have a right to use any means at our disposal, under applicable contexts of course, so long as they (or our actions as a result) do not violate any ethical principle. The technology itself should even have rights if it is to be used in the manner proposed.

It can be argued that nothing in the modern day is original; every single thing that exists was built off of something else. If not a physical item, then an idea; if not an idea, then an inspiration. Every Picasso had their Impressionism, just as every ChatGPT-generated response had its own previously prompted, evaluated, and modified origin. Production is a pool of creativity, but not a means of originality. As we inevitably integrate the renowned technology of artificial intelligence into our daily lives, it should have access to the intellectual discoveries humans have made up until, and even past, this point. How can we expect it to serve as a tool to aid in the advancement of man if it is bound to its binary code, forever wired to its motherboard? AI is not a 1998 Dell desktop tower and needs to stop being treated as one. It is a harrowingly complex presence that is meant to change the world as we know it: it is meant to be neither man nor computer, but instead a cryptic future we can only hope to trust in. Like it or not, we are dependent on it for the future, and holding its hand will only hold back discovery and delay the advancement everyone is expecting.

Of course, the issue of the technology is never black-and-white, and it would be ill-advised to assume any absolute position on it, as the future of AI is being written as we speak. There is still so much unknown about the technology and the effects it has, and will have, on mankind. However, when analyzed from an ethical standpoint, the technology sways over the blurry line between appropriate and inappropriate, tilting its weight the slightest bit more toward the “acceptable” side.